Counterfeit Sciences

Virology

The coronavirus hoax was a tremendous learning opportunity, especially among truthseekers. In order to determine what was going on around me at the time, I needed to study viruses and the principles they are predicated upon. What I found was completely astonishing…

In 2022, around the time I first began to explore germ theory, I came across a researcher named Mike Stone, the sole operator of viroliegy.com.

Below I have re-posted the introduction to his work. I hope you enjoy it as much as I do. There is nothing quite like it.

Intro to ViroLIEgy

Version 2.0

When I first set out to create ViroLIEgy.com, my goal—beyond providing a place to share and preserve my research—was to build a one-stop site where people could easily access information on the fraud of virology. As I began publishing articles and structuring the site, I aimed to categorize topics in a way that made navigation simple and intuitive. While this approach was effective, something essential was missing—something beyond organization. The site needed a reader-friendly introduction that clearly laid out the key components of the “No Virus” argument.

I have Dr. Mark Bailey to thank for pointing out this oversight. In early 2022, he suggested that I create an introductory piece to guide newcomers through the fundamental issues with virology. It was a brilliant suggestion, and since its publication in April 2022, this introduction has served as a critical resource for those unfamiliar with the topic.

Initially, my focus was strictly on virology, and the introduction reflected that. I addressed how virology fails as a science, highlighting issues such as:

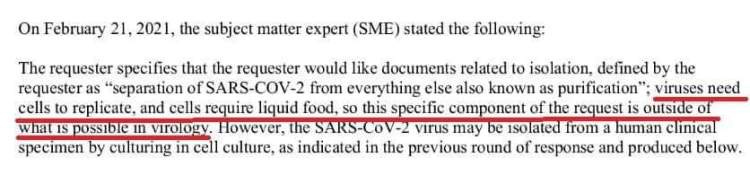

- The lack of purification and isolation of the particles claimed to be “viruses”

- The pseudoscientific nature of cell culture experiments

- The importance of satisfying Koch’s Postulates

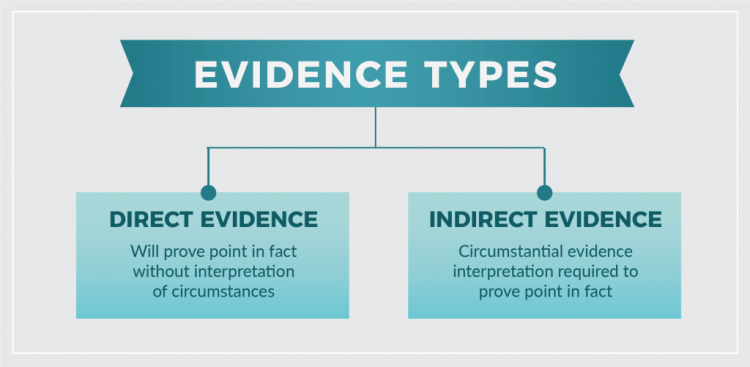

- The reliance on indirect evidence (e.g., cell cultures, electron microscopy images, “antibodies,” and genomes)

However, as I delved deeper into the origins of virology, it became increasingly clear that the very concept of a “virus” arose from the failure of germ “theory” to identify bacterial culprits for disease. To fully grasp the argument against virology, one must first understand the fundamental flaws in germ “theory”—the shaky foundation from which virology emerged.

Over the past three years, I have dedicated significant time to investigating the roots of both germ “theory” and virology, while also refining what constitutes scientific evidence and what would be necessary to meet those standards. Given this deeper understanding, I believe it is essential to update the Introduction to ViroLIEgy page to better reflect the current state of the argument.

This update will not only refine and expand upon the original sections but will also provide:

- A clearer connection between the failures of germ “theory” and the rise of virology.

- More links to relevant research and resources.

- A presentation that is more accessible and compelling for those new to the subject.

While I do not claim to speak for everyone who supports this position, this revised introduction represents what I believe are the most critical and well-supported arguments. My hope is that it will serve as a clear, digestible, and persuasive entry point for those seeking to understand—or challenge—virology.

The History of ViroLIEgy

To truly understand the flaws in virology, one must begin with the origins of germ “theory”—a concept that, despite widespread acceptance, was never scientifically validated as a proper theory should be. Popularized by Louis Pasteur, a French chemist driven more by ambition than objectivity, germ “theory” emerged in the mid-to-late 19th century. Prior to Pasteur, disease was commonly explained by the miasma theory, which held that illnesses like cholera and the plague were caused by “bad air” or foul vapors from decaying matter. Backed by Napoleon III’s government and borrowing heavily from the work of others, Pasteur rejected this idea and proposed his own: that invisible microbes—specifically bacteria and fungi—were the cause of disease and could spread from person to person.

This narrative proved politically and economically useful. Germ “theory” offered a simple, external cause of illness—one that conveniently sidestepped deeper systemic issues like poor sanitation, malnutrition, and overcrowded living conditions. By shifting the focus to microbial “invaders,” governments could deflect responsibility while appearing proactive. For industrialists, it opened lucrative markets for antiseptics, pharmaceuticals, and vaccines. Military planners, too, saw potential in a model of disease that could be “controlled” or weaponized. These overlapping interests aligned to elevate Pasteur’s work, securing him the scientific prestige and financial reward he desired—despite the fact that his methods often fell short of scientific rigor.

Indeed, Pasteur’s success was built more on manipulation than merit. As The Economist noted in 1995, he misrepresented his findings to “marginalize opponents and to gain public confidence, private sponsorship, and scientific prestige.” That same year, The New York Times reported that Pasteur had “lied about his research, stole ideas from a competitor, and was deceitful in ways that would now be regarded as scientific misconduct.” His experiments on chicken cholera, anthrax, and rabies consistently failed to meet the standards of his time. Rather than revise his conclusions, Pasteur selectively manipulated results to fit his assumptions—an approach that would become a hallmark of virology.

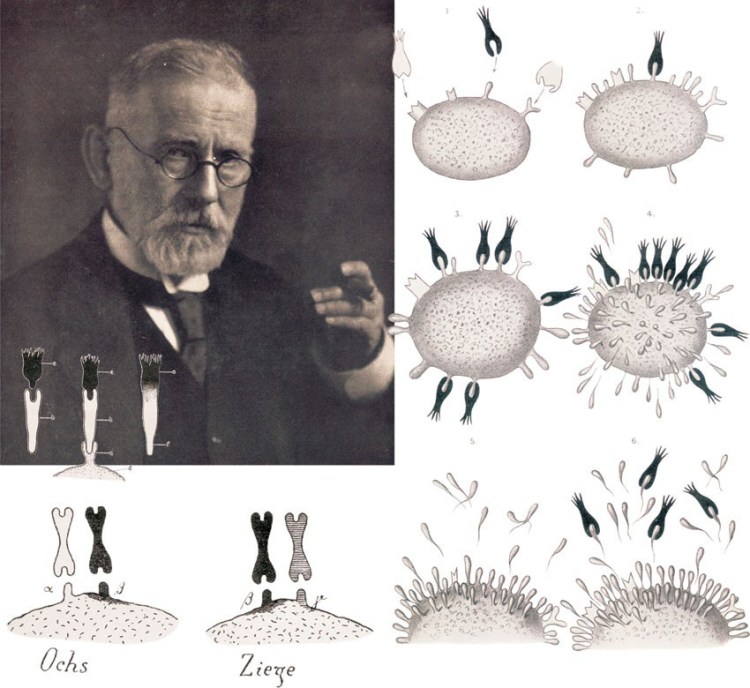

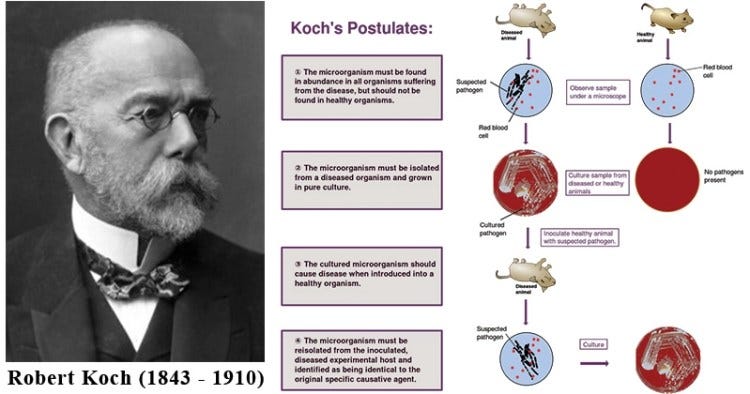

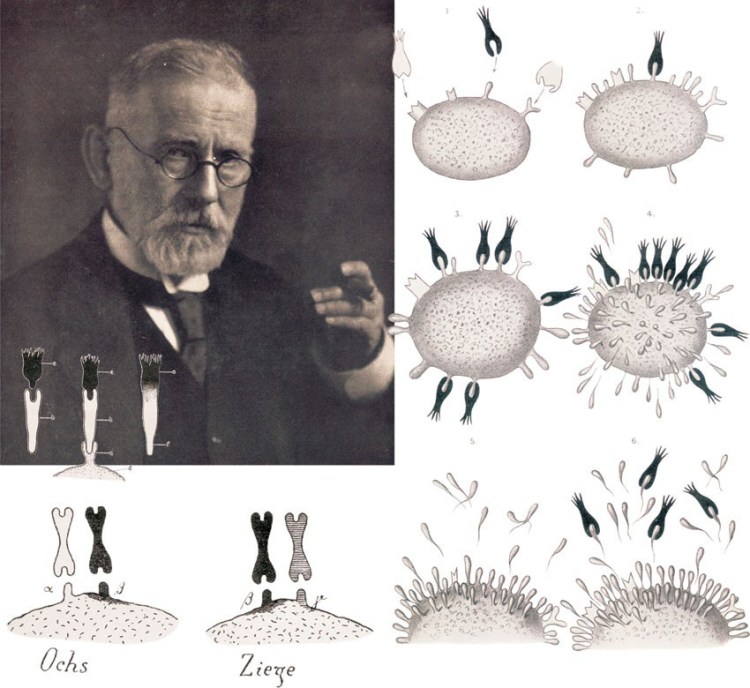

Ironically, germ “theory” gained further traction through one of Pasteur’s fiercest critics: German bacteriologist Robert Koch. The two were often at odds, with Koch accusing Pasteur of sloppy methods, including the use of impure materials and inappropriate test animals. Yet Koch, too, fell into many of the same methodological traps. In an effort to bring logic and structure to microbial causation, he introduced what came to be known as Koch’s Postulates—a set of criteria meant to prove a microbe causes disease (discussed further later). Though similar principles existed prior, Koch’s formulation became the gold standard by which diseases like anthrax and tuberculosis were officially attributed to bacteria, transforming germ “theory” from hypothesis to supposed scientific truth.

In the decades that followed, countless diseases were declared to be microbial in origin based on dubious claims that Koch’s Postulates had been fulfilled. Even Koch himself failed to meet his own standards and presented findings that contradicted germ “theory.” His work with the bacterium Vibrio cholerae is a glaring example: it was declared the cause of cholera despite failing three of the four Postulates.

Notably, many respected critics throughout history—not fringe voices, but leaders in their fields—challenged the germ “theory,” pointing out that neither Pasteur, Koch, nor their followers ever produced rigorous, causal evidence that germs actually cause disease. French chemist Antoine Béchamp observed, “The public do not know whether this is true; they do not even know what a microbe is, but they take it on the word of the master.” Rudolf Virchow, one of the most esteemed physicians of the 19th century, rejected Pasteur’s hypothesis and maintained that disease originates from internal cellular breakdown—not invading microbes.

Florence Nightingale, the founder of modern nursing, concluded from her experience with smallpox patients that disease was not “caught” from others but developed internally. Renowned medical scholar Dr. Charles Creighton—considered the most learned British medical author of his time—rejected both germ “theory” and vaccination, a stance he held until his death in 1927.

Similar skepticism came from D.D. Palmer, founder of chiropractic, and his son B.J. Palmer, who famously stated, “If the germ theory were true, no one would be alive to believe it.” Cancer researcher, surgeon, and medical writer Dr. Herbert Snow argued there had “never been anything approaching scientific proof of the causal association of micro-organisms with disease,” and that, in many cases, the evidence flatly contradicted such claims.

Dr. Walter Hadwen, once called “one of the most distinguished physicians in the world,” maintained that germs are the result, not the cause, of disease. “Nobody has yet been able to prove that there is a disease germ in existence,” he said. “In 25 percent of diseases supposed to be caused by these germs, you can’t find these germs at all.”

Some went beyond criticism. Physicians like Dr. Thomas Powell and Dr. John Bell Fraser ingested cultures of supposedly deadly germs—including diphtheria and typhoid—with no ill effects. Dr. Emmanuel Edward Klein, skeptical of Koch’s cholera claims, drank a wineglass of Vibrio cholerae and remained healthy. Max von Pettenkofer, a leading cholera expert, did the same and suffered no harm. These men weren’t merely theorizing—they were risking their lives to expose the fragility of a hypothesis masquerading as fact.

But their dissenting voices were ultimately marginalized. Germ “theory” provided a powerful narrative—one that served industrialists like the Rockefellers, Rothschilds, and Carnegies. By blaming disease on invisible, unprovable microbes, attention was diverted from unsanitary living conditions, environmental pollution, and toxic industrial byproducts. Disease was depoliticized, rebranded as an individual problem rather than a systemic failure.

These elites leveraged their immense wealth to take control of medicine, funding institutions, universities, and public health agencies that promoted the germ narrative. Simultaneously, they discredited and dismantled naturopathic, homeopathic, and holistic healing traditions that emphasized terrain theory and internal balance. What was presented as medical “progress” was, in reality, a carefully engineered paradigm shift—one that prioritized profit over public well-being.

As research continued, it became clear that the presence of bacteria alongside disease was not proof of causation. In his 1905 Nobel Prize presentation for Koch, Professor Count K.A.H. Mörner conceded that while there were “good grounds for supposing” microbial involvement in some diseases, the experimental findings were “very divergent.” Some researchers found bacteria; others did not. The same bacteria appeared in different diseases, and different bacteria appeared in the same disease. At times, bacterial presence varied dramatically in appearance, casting doubt on whether they were cause, effect, or irrelevant altogether.

More troubling still, disease was sometimes induced in experimental animals using inoculums devoid of any detectable bacteria. This presented researchers with a dilemma: admit that the disease might be caused by the experimental procedure itself—or propose an even smaller, invisible culprit.

Rather than confront the mounting contradictions, researchers invented the concept of a filterable “virus.” This idea wasn’t born from direct observation or rigorous experimentation, but from the need to salvage a failing theory. Invisible “pathogens” filled the gaps left by failed bacterial causation, allowing germ “theory” to persist in the absence of proof.

Thus, virology emerged—not as a science built on demonstrable causality, but as an extension of a collapsing hypothesis. It offered a convenient explanation for disease when neither bacteria nor environmental factors could be blamed. But its foundation, like that of germ “theory” itself, was built not on evidence—but on assumption, omission, and the manipulation of public belief.

The “Virus” Concept

“You go through narrow-pored clay filters, which hold back all bacteria, easily pass through and you have they are not yet visible with the best microscopes, including the ultramicroscope can do. We must infer their existence because they represent various human, animal and plant diseases. It’s a very special strange fact that we are dealing with these microorganisms that are completely invisible to us can operate in exactly the same way as with pure cultures of bacteria.”

-Robert Koch

https://tinyurl.com/yth9wx87

The above quote was taken from one of Robert Koch’s final speeches, the inaugural address at the Academy of Sciences on July 1, 1909. In it, he acknowledged that, unlike bacteria, “viruses” must be inferred as these entities could not be directly observed, even with the best available microscopes at the time. The “virus” was simply a concept that lacked empirical proof. This quote reveals a significant leap in reasoning, where the existence of invisible “pathogens” was asserted based on inference rather than direct evidence. This type of thinking laid the groundwork for virology, where indirect methods became the standard for identifying supposed “viral” agents, without meeting the rigorous standards of proof required in other fields of science.

As noted by Fields Virology, it was when Koch’s logical “rules broke down and failed to yield a causative agent that the concept of a virus was born.” The creation of the “virus” concept is most often attributed to three men: Dmitri Ivanovski and Martinus Beijerinck for the tobacco mosaic “virus” (TMV) in plants, and Koch’s pupil Friedrich Loeffler for foot-and-mouth disease “virus” (FMD) in animals. As these researchers were able to induce disease with filtered solutions that contained no bacteria, they ultimately concluded that there must be an invisible agent smaller than bacteria that slipped past the filters and caused disease when inoculated into healthy plants and animals. They concluded this despite using invasive and unnatural methods that damaged healthy subjects to induce disease.

According to biochemist and historian of science Ton van Helvoort’s 1996 paper When Did Virology Start?, the early concept of the “filterable virus” was ambiguous and uncertain. In fact, the connection between the study of filterable “viruses” and bacteriology was so strong that these unseen entities were initially considered just another form of bacteria rather than a distinct category. This aligned with Robert Koch’s assumption that these invisible agents “operate in exactly the same way as with pure cultures of bacteria.”

William Summers, a retired professor of Therapeutic Radiology, Molecular Biophysics & Biochemistry, and History of Medicine, echoed this sentiment, stating, “The concept of a virus has not been stable and has evolved since its introduction in the latter half of the nineteenth century.” He noted that the definition of “virus” was continually reworked, shaped more by technological advancements than by scientific understanding.

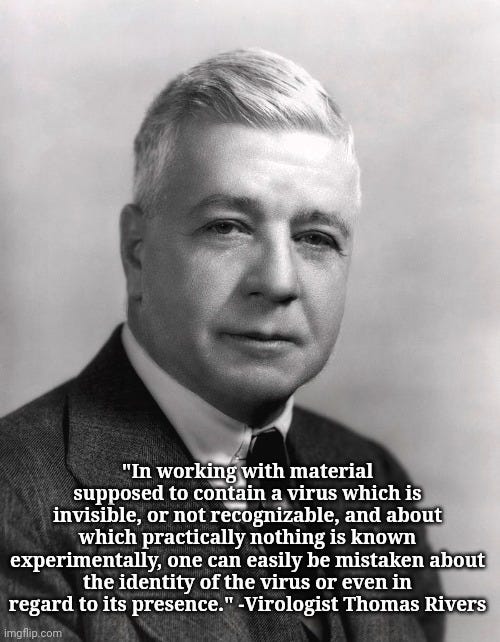

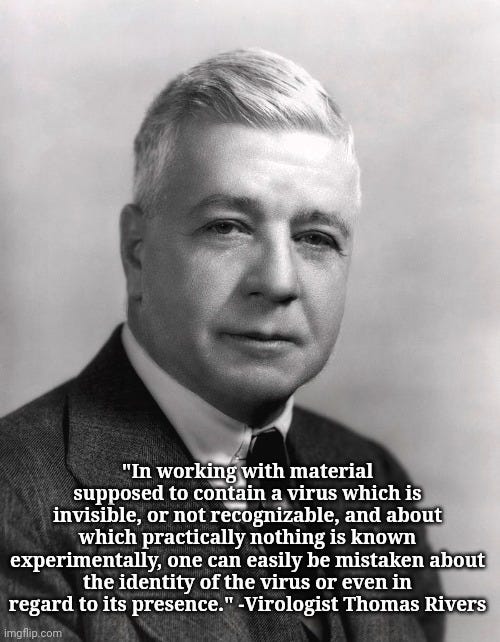

Virologist Thomas Rivers further highlighted this uncertainty in 1932, acknowledging that “viruses” were defined primarily in negative terms—by what they were not, rather than by what they were. Their defining characteristics included:

- Being invisible to standard microscopic methods.

- Passing through filters designed to retain bacteria.

- Requiring living cells for propagation.

Despite an abundance of data, Rivers admitted that much of it was unreliable, plagued by contradictions and poor-quality research. While estimates existed regarding the supposed size of “viral” particles, he cautioned that none could be accepted without reservation and that the exact size of any “virus” remained unknown. He attributed the “numerous discordant results” in the literature—particularly on filterability, size, and visibility—to inadequate experimentation, flawed reasoning, bias, and imperfect methods. Rivers also acknowledged that purified “viruses” were essential for proper study, yet no “virus” had ever been obtained in an absolutely pure state.

At the time, theories about the nature of these invisible entities varied widely. Some interpretations of “viruses” included:

- Living contagious fluids

- Oxidizing enzymes

- Protozoan parasites

- Inanimate chemical substances

- Minute living organisms related to bacteria

- A nucleoprotein toxin

Interestingly, nearly a decade earlier in 1923, Rivers had admitted that when working with material “supposed to contain a virus which is invisible, or not recognizable, and about which practically nothing is known experimentally, one can easily be mistaken about the identity of the virus or even in regard to its presence.”

This persistent uncertainty in defining and isolating “viruses” underscores the fundamental issues at the core of virology—a field shaped more by shifting assumptions than by definitive scientific evidence. Unlike bacteria, which could be grown in pure cultures, studied in a living state under light microscopes, and fully characterized, “viruses” remained unobservable and unobtainable, their existence inferred only through assumptions and indirect evidence.

The uncertainty was further highlighted in Karlheinz Lütke’s 1999 paper The History of Early Virus Research, which explained that the concept of the filterable “virus” was never universally defined in a way that all researchers could agree upon. Clashing interpretations and contradictory experimental results made it impossible to establish definitive facts. Any claim presented as a “fact” could just as easily be dismissed as fiction by opponents.

Lütke described how researchers struggled to replicate each other’s experiments, often obtaining completely contradictory findings. This led to the conclusion that previous research must have been flawed. Decades of evidence failed to yield a consistent definition of a “virus,” as scientists could not even agree on what a “virus” fundamentally was. As he put it:

“Very different interpretations of the nature of this phenomenon arose, which were put forward against each other. No experimental evidence for this or that concept, which all researchers should have accepted, could be presented by any side. In other words, the decision as to whether this or that explanation most accurately expresses the “true” nature of the virus could not be “objectified” empirically. Every version of the interpretation of the phenomenon remained open to attack, facts presented to the expert public could often be reinterpreted into fictions by opponents, who brought into play the dependence of the findings on the conditions of observation, the local situation of the experiments, the research-related nature of the attributions of characteristics, etc. as sources of error. For example, findings often reported by certain virus researchers at the time were not confirmed by other researchers as a result of their own experiments, or the observations could not be reproduced by all scientists working with the virus. Often, findings to the contrary were reported, or the findings that had been examined were considered artefacts. As with justification, reasons of various kinds could be invoked to reject the positions debated. Findings that were used to empirically confirm a suspected connection were often soon joined by negative findings reported by other researchers. However carefully and deliberately the techniques used in the experiments were employed, and despite the fact that each party could offer credible reasons for defending their respective positions and provide empirical evidence – which explains why “the various opponents ‘constructed’ widely diverging research objects which they identified as the ‘virus’” (van Helvoort 1994a: 202) – at no time did they offer compelling reasons that would have led the other party to finally abandon artifact accusations.”

Although “viruses” were hypothesized to exist in 1892, it was not until six decades later in 1957 that virologists settled on a standardized definition—a consensus largely manufactured by French microbiologist André Lwoff. While working at the Institut Pasteur in Paris, Lwoff took it upon himself to define a “virus” at the 24th meeting of the Society for General Microbiology. At the time, there were three competing perspectives: “viruses” were either microorganisms, mere chemical molecules, or something in between. Drawing on the indirect evidence accumulated up to that point, Lwoff synthesized existing data and ideas, ultimately basing his definition on bacteriophages—the so-called “bacteria eaters.”

Bacteriophages were first described in 1915 by Frederick William Twort, who did not consider them to be “pathogenic viruses.” Instead, he proposed that what he had discovered was an enzyme secreted by bacteria, initiating an endogenous process rather than an invasion by an external entity. However, French microbiologist Félix d’Hérelle took a different view in 1917. He claimed that bacteriophages were “invisible microbes” that actively destroyed “pathogenic” bacteria, dubbing them “microbes of immunity” and classifying them as obligate “viruses” of bacteria.

According to the Royal Society’s obituary of Twort, d’Hérelle “boldly alleged” bacteriophages to be “infectious viruses” and “spent the whole of his life forcing his views on his contemporaries.” This sparked a heated debate spanning decades: were bacteriophages “viruses,” autocatalytic enzymes, a filtrable stage in a complex life cycle of a bacterium, or something else entirely? Despite this unsettled controversy, Lwoff’s definition of “viruses” was heavily influenced by the bacteriophage model, effectively shaping the foundation of modern virology. As noted by van Helvoort, this definition, while controversial, was used to legitimize virology as a scientific field:

“At the very time when the “modern concept of virus” was being formulated, there were already arguments opposing the newly formed concept of virus. Nevertheless, the definition that was proposed by Lwoff is the definition that still functions today to legitimize the existence of virology as an independent scientific domain.”

By anchoring the definition of “viruses” to bacteriophages, Lwoff committed a reification fallacy—treating an abstract and unsettled concept as if it were a concrete, established reality. In doing so, he reinforced the assumption that “viruses” functioned similarly to bacteriophages, despite the ongoing debate over the true nature of both.

Bacteriophages became the model for “infectious viruses” based on bacterial culture lysis—yet lysis is admitted to occur without any “virus” present. Early microbiologists observed this lysis frequently but called it autolysis, not “infection.” No one proposed “infectious agents.” Rather than investigate a new cause, researchers assumed methodological error and simply repeated the experiment. They didn’t view it as a biological phenomenon requiring explanation. Phage theory wasn’t drawn directly from those observations—it was imposed later as reinterpretation.

Lwoff’s conceptual leap transformed an uncertain pseudoscientific hypothesis into a rigid framework, solidifying the “infectious,” exogenous model of “viruses” while marginalizing alternative interpretations. Yet as bacteriophages were not definitively understood, how solid was the claim that “viruses,” as a whole, were “infectious” disease agents? By Lwoff’s own admission, the “virus” was a concept—something conceived in the mind—and he sought to impose a unified definition, stating that he “attempted to visualize the virus as a whole, to introduce a general idea, the notion or concept of virus.” His conclusion was ultimately circular: “viruses should be considered as viruses because viruses are viruses.” Lwoff’s reliance on flawed reasoning and a disputed model meant that virology’s foundations rested on assumption rather than empirical certainty, creating an illusion of scientific consensus where genuine uncertainty remained.

Is Virology A Science?

Science: knowledge or a system of knowledge covering general truths or the operation of general laws especially as obtained and tested through scientific method.

Pseudoscience: Theories, ideas, or explanations that are represented as scientific but that are not derived from science or the scientific method.

A brief look at the history of virology reveals that the “virus” was originally a concept devised to explain the failure to satisfy Koch’s Postulates in linking bacteria to disease. Germ “theory” required a new scapegoat to account for the overwhelming contradictions falsifying the bacterial disease hypothesis. The perfect solution? An invisible “virus”—an entity that supposedly operated beyond observable natural laws and conveniently evaded the criteria of Koch’s Postulates.

The “virus” has remained a mere concept, lacking direct empirical evidence to support its hypothesized role in disease causation. In fact, conflicting and contradictory indirect evidence threatened to collapse the concept altogether. Until the 1950s, “viruses” were still classified within bacteriology, as virology had yet to establish itself as a separate field. It was André Lwoff’s redefinition of the “virus” into a unified concept that finally gave virology an identity distinct from bacteriology.

According to Sally Smith Hughes in The Virus: a History of the Concept, this development of a “more precise concept of the virus” allowed for the “unification and maturation” of virology as a scientific discipline in the mid-20th century. Only then did researchers start calling themselves “virologists” rather than parasitologists or microbiologists, and only then were the first English-language virology journals established. But did this conceptual unification—built upon decades of conflicting indirect evidence—truly elevate virology to the status of a legitimate scientific field?

For a field to be considered scientific, it must adhere to the scientific method—a process developed over centuries to systematically formulate and test hypotheses based on observations of natural phenomena. This method allows researchers to determine whether a hypothesis is presumably true or false through controlled experimentation. A field that fails to follow this methodology falls into the realm of pseudoscience—claims or practices that masquerade as science without adhering to its rigorous standards. The scientific method consists of systematic steps designed to test a hypothesis through controlled experimentation, including:

- Observation of a natural phenomenon

- Hypothesis formulation (alternate and null hypotheses)

- Identification of variables:

  - Independent variable (IV) – the presumed cause

  - Dependent variable (DV) – the observed effect

  - Control variables – factors held constant

- Experimentation – manipulating the IV to observe changes in the DV

- Data analysis – validating or invalidating the hypothesis

According to the National Academy of Sciences, science is “the use of evidence to construct testable explanations and predictions of natural phenomena.” Scientists begin by observing the natural world, then proceed to experimentation. Crucially, explanations must be based on naturally occurring phenomena—processes or events that happen in nature without human interference. If an explanation relies on forces outside of nature, it cannot be tested, confirmed, or falsified.

Virology fails at this first step: it cannot observe “viruses” in nature. The only observable natural phenomenon is disease. No one has directly seen a “virus” enter a host and cause disease, nor witnessed person-to-person “viral” transmission in action. From the beginning, virologists have assumed the existence of “pathogenic viruses,” treating them as an idea in need of evidence—not as an observed phenomenon demanding explanation.

The core issue is the independent variable. In a valid scientific experiment, the independent variable—the presumed cause—must be directly observed, isolated, and manipulated to test whether it produces a specific effect. For virology, this would mean obtaining fully purified and isolated “viral” particles directly from a sick individual prior to experimentation.

Purification is a necessary process required to obtain the particles believed to be “viruses” free from all contaminants, pollutants, and foreign materials present in the fluids of a sick patient. This includes separating the presumed “viral” particles from host cell debris, bacteria, microorganisms, multivesicular bodies, “exosomes,” and other extracellular vesicles. Only by isolating these particles completely—leaving nothing but what is claimed to be the “virus”—could virologists validly use them as an independent variable in an experiment to test for causation.

Purification can be achieved through a combination of methods, such as:

- Centrifugation (e.g., density gradient ultracentrifugation)

- Filtration

- Precipitation

- Chromatography

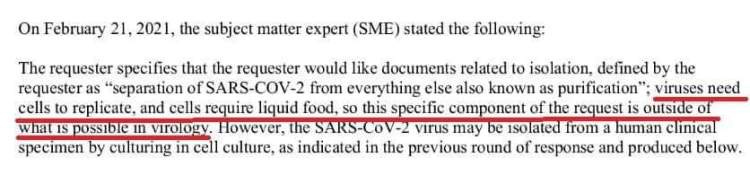

However, this is where virology faces a major problem. Although these purification techniques exist, virologists rarely apply them when claiming to have “isolated” a “virus.” In fact, many virologists openly claim that purification is either not possible or not necessary. Some common justifications include:

- There are too few “virus” particles in clinical samples to purify.

- The particles are too fragile and become damaged or lost during purification.

- “Viruses” cannot be separated from host cells because they require cell culture to replicate.

Here are a few examples of the excuses being put into action. From Luc Montagnier, discoverer of HIV:

“There was so little production of virus it was impossible to see what might be in a concentrate of virus in the gradient.”

“One had not enough particles produced to purify and characterise the viral proteins. It couldn’t be done.”

“I repeat we did not purify. We purified to characterise the density of the RT, which was soundly that of a retrovirus. But we didn’t take the “peak”…or it didn’t work…because if you purify, you damage.”

From University of Auckland associate professor and microbiologist Siouxsie Wiles in an AAP “Fact” check:

“Viruses are basically inanimate objects which need a culture to activate in. But the way they are phrasing the requests is that the sample must be completely unadulterated and not be grown in any culture – and you can’t do that,” she told AAP FactCheck in a phone interview.

“You can’t isolate a virus without using a cell culture, so by using their definition it hasn’t been isolated. But it has been isolated and cultivated using a cell culture multiple times all around the world.”

From the CDC:

As can be seen, while the methods of purification do exist, it is claimed that these methods are unsuitable for separating the assumed “virus” particles from all other material. In other words, the particles said to be “viruses” cannot be purified (freed from contaminants) or isolated (separated from everything else), and therefore cannot serve as a valid independent variable in an experiment designed to prove cause and effect.

Without an observed natural phenomenon and a valid independent variable, virology cannot even begin to formulate a proper scientific hypothesis. For example, in the case of “SARS-CoV-2,” no one directly observed a “virus” entering humans from a bat, pangolin, or other animal in nature and causing respiratory disease. Likewise, no one has ever directly witnessed the measles “virus” pass from one child to another and cause a rash and fever.

At best, virologists could speculate that fluids from a sick person might contain something that causes illness in a healthy host. But to then leap to the claim that a “virus” is the cause, they would have to directly observe that “virus” in nature, isolate it from the patient’s fluids in pure form, and use it alone—without additives—in a controlled experiment to reproduce the disease. At no point have any “virus” particles been purified and isolated directly from the fluids of a sick host so they could be studied and characterized prior to any experiments. This has never been done. Instead, virology relies on models, assumptions, and indirect effects—none of which substitute for direct observation of the proposed cause acting in nature. Since the presumed cause (the “virus”) is neither observed in nature nor isolated and tested as an independent variable, it remains an unverified concept, and the scientific method collapses before it even starts.

Despite being elevated as a legitimate scientific field in the late 1950s, virology ultimately relies on indirect evidence and assumptions about unnatural phenomena to support the concept of “viruses” in nature. Let’s examine some of the tricks used within virology that have contributed to the widespread belief in its scientific validity.

Satisfying Koch’s Postulates?

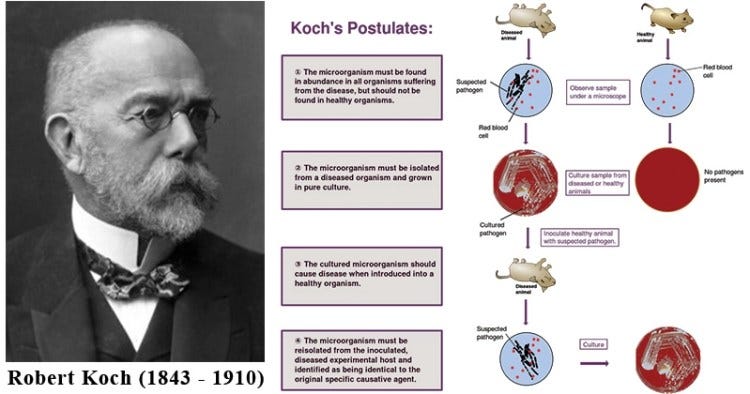

In the late 1870s, German bacteriologist Robert Koch began developing his logic-based postulates, which would eventually become the gold standard for proving that a microbe causes a disease. However, different aspects of these same logical requirements already existed before Koch. In fact, Koch’s Postulates are sometimes referred to as the Henle-Koch Postulates because a similar set of criteria had already been proposed by Koch’s teacher, German pathologist Friedrich Gustav Jacob Henle. Koch later modified and popularized them.

According to Koch’s Postulates in Relation to the Work of Jacob Henle and Edwin Klebs, Henle understood that simply finding an organism in a diseased host was not enough to establish causation. To prove the “contagious” nature of a disease, Henle recognized the necessity of isolating (i.e., separating one thing from everything else) the microbe from the host fluids and studying it independently. According to Henle, “one could prove empirically that [the organisms] were really effective only if one could isolate…the contagious organisms from the contagious fluids, and then observe the powers of each separately.” This became a major tenet of Koch’s Postulates, with Koch stating, “The pure culture is the foundation for all research on infectious disease.”

While there are slightly different ways of stating the criteria that make up Koch’s Postulates, a common structure remains across all versions. Typically, a fourth postulate—added by plant biologist E. F. Smith in 1905—is presented alongside Koch’s original three. As such, Koch’s Postulates are commonly written as follows:

Koch’s Postulates

- The microorganism must be found in abundance in all cases of those suffering from the disease, but should not be found in healthy subjects.

- The microorganism must be isolated from a diseased subject and grown in pure culture.

- The cultured microorganism should cause the exact same disease when introduced into a healthy subject.

- The microorganism must be reisolated from the inoculated, diseased experimental host and identified as being identical to the original specific causative agent.

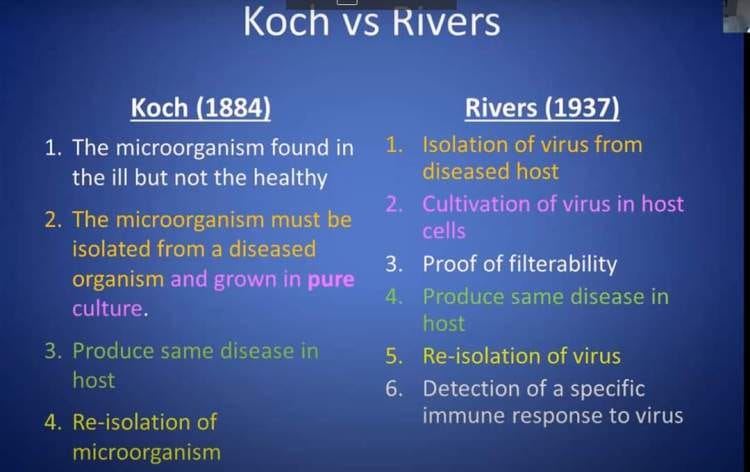

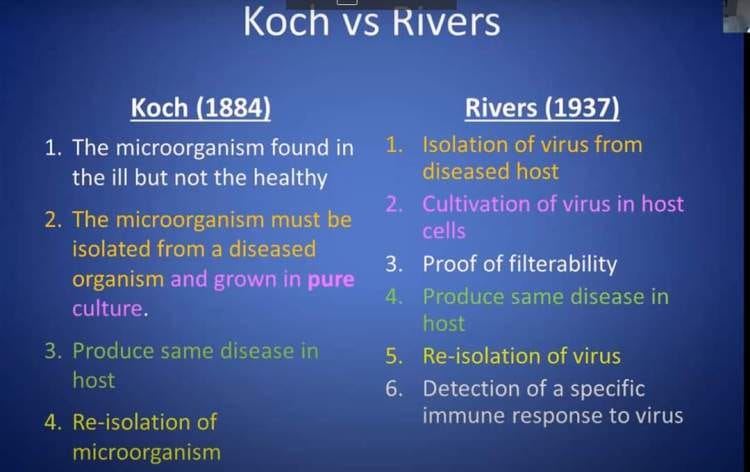

At the time, Koch’s Postulates were developed for bacteria, as “viruses” were an unknown concept and not officially “discovered” until 1892, with the tobacco mosaic “virus” in plants. Although the Postulates are logically applicable to all microbes, virologist Thomas Rivers admitted in 1937 that it was “obvious that Koch’s postulates have not been satisfied in viral diseases.” Realizing the futility of fulfilling the Postulates without direct evidence for the “virus” concept, he attempted to revise them to make them more applicable to virology. Rivers’ revisions allowed for indirect evidence and inference, such as transmission via filtered fluids and cell culture effects—yet even these weakened standards have not been fulfilled by virologists. This has led to several arguments attempting to undermine the relevance of Koch’s Postulates, including claims that:

- The Postulates are outdated.

- They were created specifically for bacteria.

- “Viruses” cannot be grown in pure culture.

- Koch himself could not satisfy his own criteria.

Even though Rivers attempted to establish criteria solely for virology to satisfy, it is widely recognized that it is Koch’s Postulates, not Rivers’, that are the standard for proving that any microbe, including “viruses,” causes disease.

Several reputable sources affirm this, including:

- The National Institutes of Health (NIH): “The rules for experimentally proving the pathogenicity of an organism are known as Koch’s Postulates.”

- The Centers for Disease Control and Prevention (CDC): “Koch’s postulates form the basis of proof that an emergent agent is the etiologic agent. Therefore, the interpretation should consider the successful fulfillment of each of Koch’s postulates.”

- The World Health Organization (WHO): “Conclusive identification of a causative must meet all criteria in the so-called ‘Koch’s postulate.’”

- The College of Physicians of Philadelphia: “Scientists today follow these basic principles, which we now call Koch’s postulates, when trying to identify the cause of an infectious disease.”

- The American Association of Immunologists (AAI): “What was later called Koch’s postulates is still used by scientists today when looking for causes of new diseases (such as AIDS).”

- Microbiology with Diseases by Taxonomy, written by Professor Robert W. Bauman, Ph.D.: “Koch’s postulates are the cornerstone of infectious disease etiology. To prove that a given infectious agent causes a given disease, a scientist must satisfy all four postulates.”

- Virologist Ron Fouchier: “To show that it causes disease you need to fulfill Koch’s postulates.”

- Plant Pathology Fifth Edition: “Koch’s rules are possible to implement, although not always easy to carry out, with such pathogens as fungi, bacteria, parasitic higher plants, nematodes, most viruses and viroids, and the spiroplasmas.”

- Retrovirologist Peter Duesberg: “Koch’s postulates are pure logic. Logic will never be ‘out of date’ in science.”

- Ross and Woodyard: “Koch’s postulates are mentioned in nearly all beginning microbiology textbooks and they continue to be viewed as an important standard for establishing causal relationships in biomedicine.”

So why are defenders of virology so eager to discard the gold standard of Koch’s Postulates? As previously noted, the core issue is that, in order to satisfy these criteria, virologists must demonstrate that they can purify and isolate “virus” particles directly from a sick host and then establish a causal relationship through controlled experimentation. However, as we’ve discussed, this requirement cannot be fulfilled because virology, as currently practiced, circumvents the scientific method. Virologists do not purify, isolate, visualize, and characterize the alleged “viral” particles directly from the fluids of a diseased host prior to experimentation. Instead, they depend on a series of indirect, pseudoscientific methods to persuade the public that their hypothetical constructs are real.

Indirect Does Not Equal Direct

“This type of functional correlation is the only way in which the behavior of viruses may be studied. Since viruses cannot be seen directly, some indirect evidence of their presence must be forthcoming. This indirect evidence is the effect the viruses produce in animals. If no experimental animal is susceptible, then there is no way of observing the presence or absence of the virus, let alone its functional correlates with other states or conditions. Advance in the study of a virus disease is usually dependent on the discovery of a susceptible host. If that host happens approximately to reproduce the original disease, well and good. But such reproduction is not essential.”

-Lester S. King

https://doi.org/10.1093/jhmas/VII.4.350

Lester King’s earlier observation was echoed in the 2006 sixth edition of Introduction to Modern Virology, which states: “Viruses occur universally, but they can only be detected indirectly.” Because virologists cannot provide purified and isolated particles they claim are “viruses,” they lack direct proof of the existence of these entities. Direct evidence clearly and unambiguously demonstrates a fundamental fact. To compensate for this lack, virologists rely on various forms of indirect evidence to infer the presence of “viruses.”

Indirect evidence involves multiple observations that, while not proving the key claim individually, are pieced together to suggest that a “virus” exists—if the interpretations are accepted as valid. It’s not unlike how parents construct a narrative for children to believe in Santa Claus: cookie crumbs on a plate, a half-empty glass of milk, sooty footprints, and staged photographs—all indirect signs meant to convince the child of something never directly observed.

Tellingly, a 2020 historical review of early bacteriophage and virology research admitted that the kinds of indirect evidence collected during the early development of the field would not meet today’s scientific standards:

“While the quality standards of modern science impose obtaining direct evidence and visualization of the phenomena and objects studied, the methodology of science of the earlier period largely relied on making conclusions based on multistep indirect deductive reasonings, which would not be accepted as sufficiently convincing by the peer reviewers of the modern scientific journals.”

The primary forms of indirect evidence used to substitute for the unseen “virus” include:

- Cell culture and the cytopathogenic effect (CPE)

- Electron microscopy images

- “Antibody” responses

- Genomic sequences

- Animal studies

We’ll briefly examine each of these areas.

Cell Culture = “Virus Isolate?”

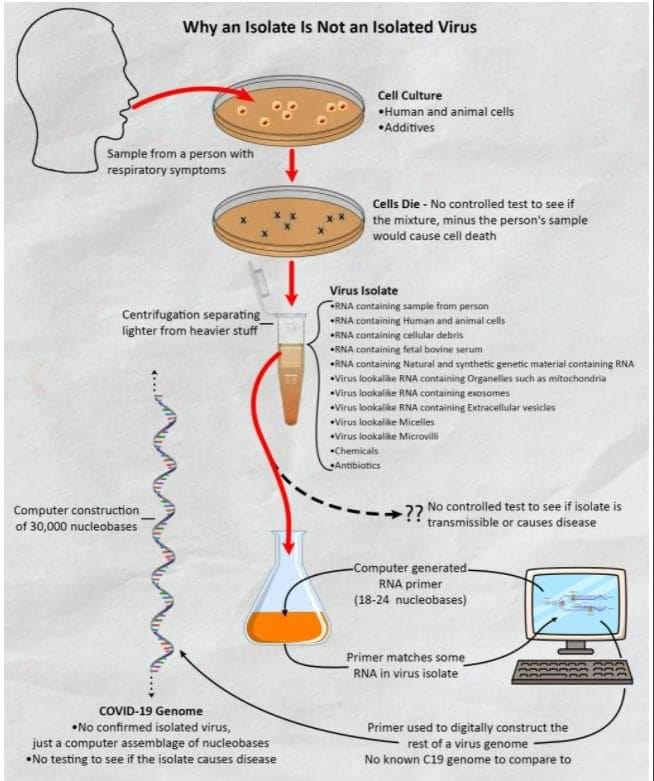

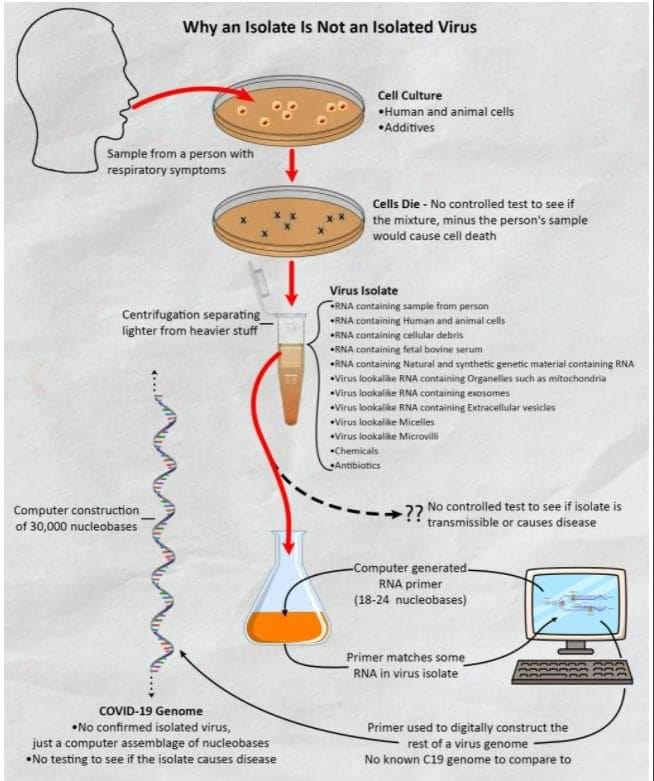

When virologists refer to isolating a “virus,” they are not talking about particles that are fully separated from everything else such as contaminants, pollutants, and other foreign materials after taking a sample from a sick host. Their definition of isolation is quite different, as explained by virologist Vincent Racaniello:

“Many of the terms used in virology are ill-defined. They have no universally accepted definitions and there is no ‘bible’ with the correct meanings.”

“Let’s start with the term virus isolate, because it’s the easiest to define. An isolate is the name for a virus that we have isolated from an infected host and propagated in culture. The first isolates of SARS-CoV-2 were obtained from patients with pneumonia in Wuhan in late 2019. A small amount of fluid was inserted into their lungs, withdrawn, and placed on cells in culture. The virus in the fluid reproduced in the cells and voila, we had the first isolates of the virus.

Virus isolate is a very basic term that implies nothing except that the virus was isolated from an infected host.”

What virologists refer to as “isolation” involves taking fluid from a sick host—fluid that contains a complex mixture of biological materials, including bacteria, host cells, other microorganisms, and unknown components. This fluid is not purified. It is then combined with various additives such as antibiotics, animal serum, and other chemicals. Despite its mixed and unpurified nature, this fluid is referred to as a “viral isolate.” Importantly, there is no verification that any “viral” particles are present in the sample before experimentation begins.

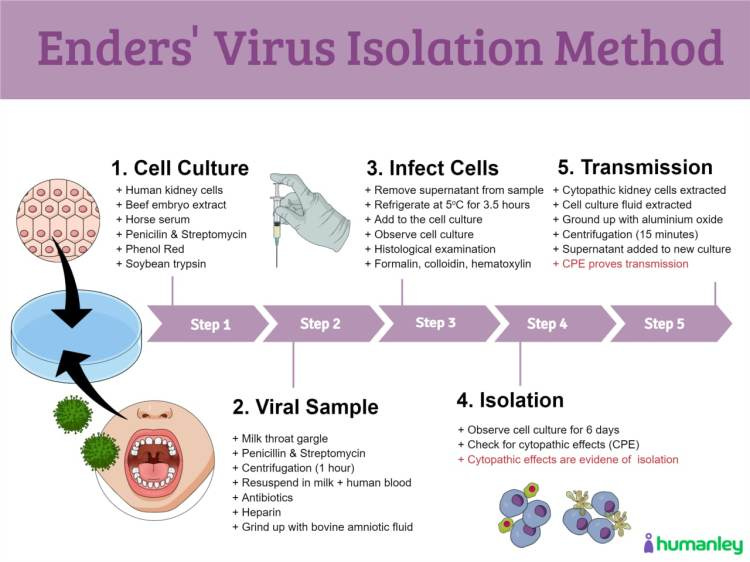

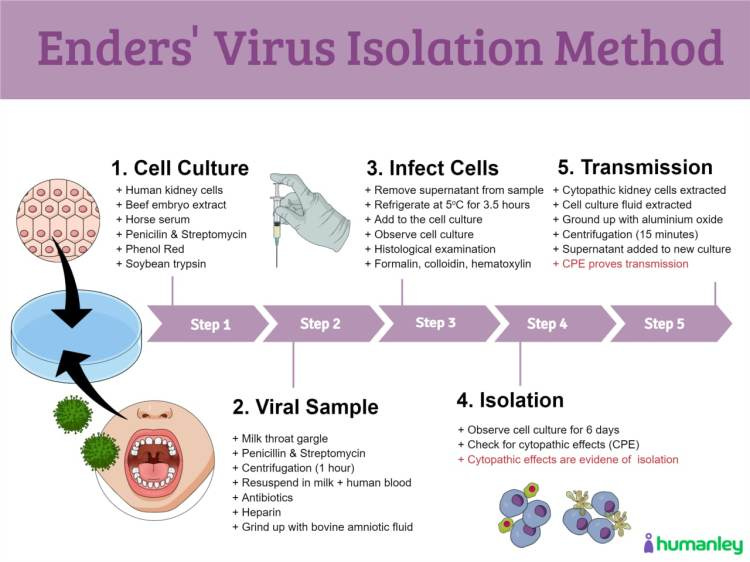

The unpurified sample is then introduced into a cell culture—an invalid experimental method established by John Franklin Enders in 1954. For instance, in his seminal measles study, Enders collected throat washings from children suspected of having measles—that were gargled in fat-free milk—and applied these to a culture of human and monkey kidney cells. He supplemented the culture with bovine amniotic fluid, beef embryo extract, horse serum, antibiotics, soybean trypsin inhibitor, and phenol red (used to monitor pH changes as a proxy for cell metabolism). The mixture was incubated for several days, and the fluids were passaged on the 4th and 16th days.

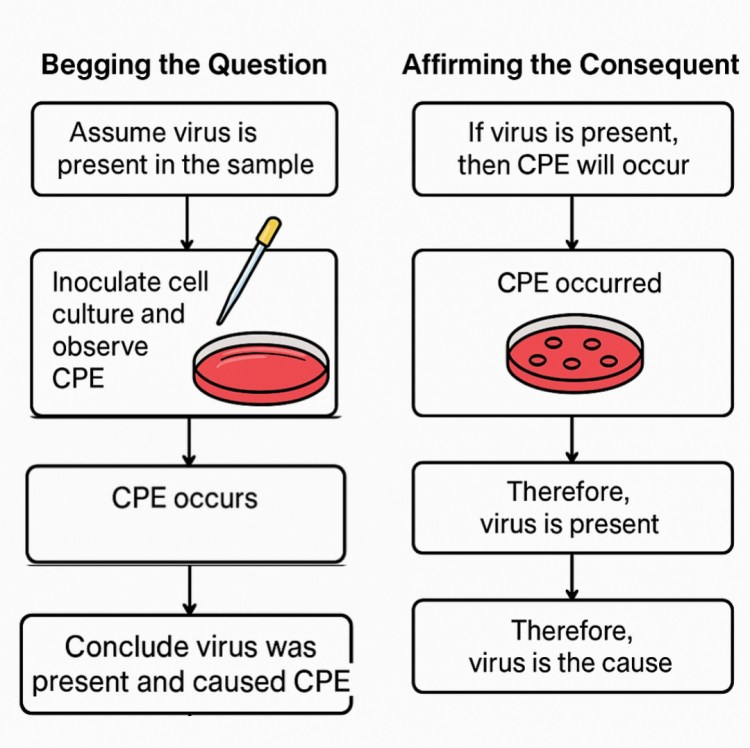

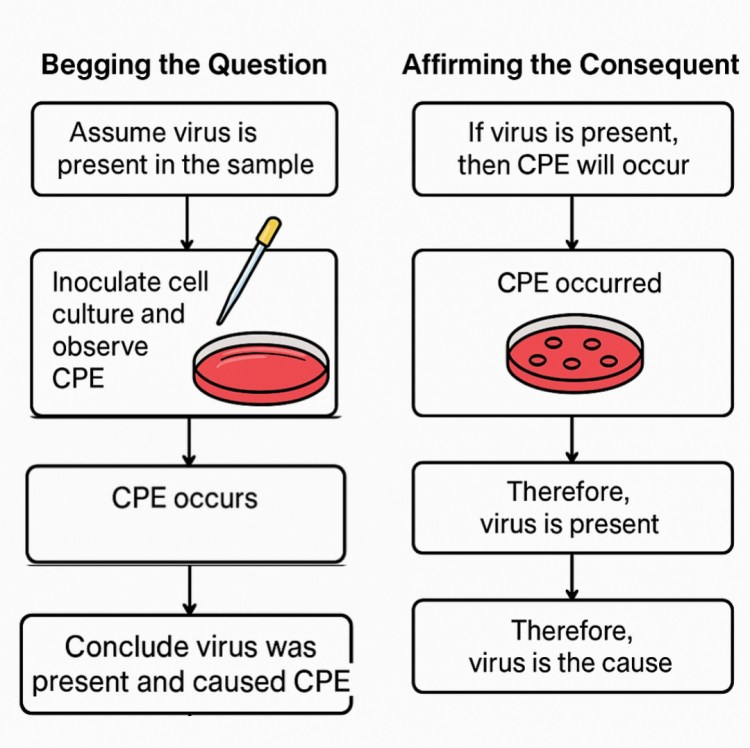

Eventually, Enders observed what is known as the cytopathogenic effect (CPE)—a visual pattern of cell breakdown and death in the culture. He interpreted this cellular damage as indirect evidence of a replicating “virus” present in the throat washings—allegedly entering the cells, hijacking their machinery, and lysing them in the process. In essence, Enders interpreted the resulting cellular debris not as the toxic outcome of a stressed and contaminated environment, but as newly formed copies of a “virus.” Ironically, he observed an identical pattern of cell death in the “uninfected” cultures, yet failed to acknowledge that this directly falsified his hypothesis that the effect was caused by a “virus.”

The resulting cell culture supernatant—containing broken cells, additives, and the original unpurified material—is what virologists refer to as the “viral isolate.” Yet at no point in this process is a “virus” actually purified or separated from the surrounding material. Despite this, the term “isolate” is used as if something was actually isolated.

This same process of adding unpurified fluids to starved and poisoned cells, usually taken from African green monkey kidneys, aborted human fetuses, or human cancerous cell lines, is still used by virologists today. They incubate their unpurified mixtures for days, looking throughout for CPE as indirect evidence that a “virus” is present. However, a few critical problems arise from this approach:

- CPE is an artificial, lab-created phenomenon that does not occur in nature.

- The CPE observed in the cell culture is not specific to “viruses;” many other factors can cause similar effects, such as bacteria, amoebas, parasites, antibiotics, antifungals, chemical contaminants, age-related cell deterioration, and environmental stress.

- The mixture of unpurified fluids, multiple contaminants, and foreign substances directly contradicts the concept of purification and isolation, which is essential for identifying a single entity.

This entire process is steeped in fallacious logic. It begins with circular reasoning: the presence of a “virus” is assumed from the outset, and that same assumption is used to interpret the results of a method that cannot independently verify it. Researchers presuppose the existence of a “pathogenic virus,” then interpret certain phenomena as evidence of that entity—using the assumed effect to prove the presumed cause. Rather than providing independent evidence for the “virus’s” existence, this reasoning simply presupposes what it sets out to demonstrate.

This is the foundational flaw in virology as a scientific discipline. The very entity assumed to cause an artificial, lab-induced effect is never directly proven to exist prior to the experiments designed to test its effects. But it is essential that the cause—the presumed “virus”—be demonstrated to exist before the effect. This is known as time order, the principle that a cause must precede its effect, and it is a necessary condition for establishing a causal relationship between two variables.

Virology also relies on the fallacy of affirming the consequent: treating an observed effect (such as cell damage) as proof of the presumed cause (a “virus”). In cell culture experiments, this occurs when virologists claim that the observation of CPE in an “infected” culture confirms the presence of a “virus.”

- If a “virus” is present in the sample, CPE will occur.

- CPE is observed.

- Therefore, a “virus” is present.

This line of reasoning is fallacious for several reasons—chiefly, because the effect (CPE) cannot serve as proof of the cause (a “virus”) without first establishing the cause exists. One cannot validly infer the existence of an unproven entity solely from an effect that may have many other explanations.

This leads directly to the false cause fallacy—assuming, without evidence, that one thing causes another. Any time someone claims “A causes B” without ruling out alternative explanations, they commit this fallacy. It’s often summed up in the phrase, correlation does not equal causation. The mere occurrence of an effect alongside an assumed cause does not prove a causal relationship.

In a revealing 1954 paper titled Cytopathology of Virus Infections: Particular Reference to Tissue Culture Studies—published the same year as his influential measles study—John Enders admitted that CPE could be triggered by many agents aside from “viruses.” He acknowledged that cytopathic effects are influenced by numerous factors, both known and unknown. These include the age and type of donor tissue, the culture conditions, and environmental variables. In other words, the effect virologists attribute to a “virus” may have nothing to do with one at all.

This undermines the entire approach. The cell-cultured supernatant cannot function as an independent variable in accordance with the scientific method because it is an undefined mixture—a “toxic soup”—containing countless unknowns. Any one of these components, including the experimental conditions themselves, could be responsible for the observed cytopathic effects, which are artificial, lab-induced phenomena serving as the dependent variable—not natural effects observed in real-world conditions. Moreover, no “virus” is ever directly observed in the culture—only the nonspecific cellular damage.

If the cell culture method cannot prove that a “virus” is present, cannot isolate it from the mixture, and cannot distinguish a “viral” effect from other potential causes, then it cannot be used to confirm the existence of a “virus,” let alone prove it causes disease. Nor can it serve as a valid test of any hypothesis, since it fails to reflect a naturally occurring phenomenon.

The technique widely used in virology fails to meet essential requirements of the scientific method—and no amount of assumption or interpretation can overcome that failure. Virologists are not isolating “viruses.” They are manufacturing conclusions in a dish.

Electron Microscopy: Point and Declare

Many people believe that the existence of “viruses” is proven by electron microscope (EM) images. However, this is one of the great deceptions of virology. Before the invention of the electron microscope in 1931, “viruses” were invisible to light microscopy and remained largely conceptual. As virologist Karen Scholthof acknowledged, for decades, “viruses” remained “outside the bounds of understanding. We couldn’t see them.”

With the electron microscope—capable of magnifying up to 50,000 times—scientists could now visualize particles that had previously existed only as theoretical constructs. As one observer put it, this instrument gave scientists “the power to actually see as individual objects things that had been only mental concepts.”

This technological leap marked a paradigm shift. Until then, “viruses” were identified primarily through indirect association with disease. Now, classification would rest on morphology: the shape and structure of particles observed via EM. Historian Ton van Helvoort underscored the significance of this change, noting that virology didn’t fully break away from bacteriology or gain status as an independent discipline until “the first viruses were made visible.”

But a critical problem underpins this approach: the assumption that the particles identified are indeed “pathogenic viruses.” The first EM images—those captured by Helmut Ruska in 1938—were of particles selected based on their size, inferred from filtration studies. If a diseased sample contained particles not seen in a healthy one, those particles were simply presumed to be the “virus.” Yet this presumption was never validated by the scientific method—specifically, not through Koch’s Postulates or any rigorous demonstration that the particles caused disease.

As discussed previously, virologists do not purify or isolate these particles directly from the fluids of sick individuals. In early studies, unpurified tissue samples were imaged, and arbitrarily chosen particles were designated “viruses.” Their visual features became the morphological template for identifying future “viruses.” Helmut Ruska and others simply selected shapes that matched their expectations. These subjective judgments, built on assumption rather than scientific proof, laid the foundation for modern virology.

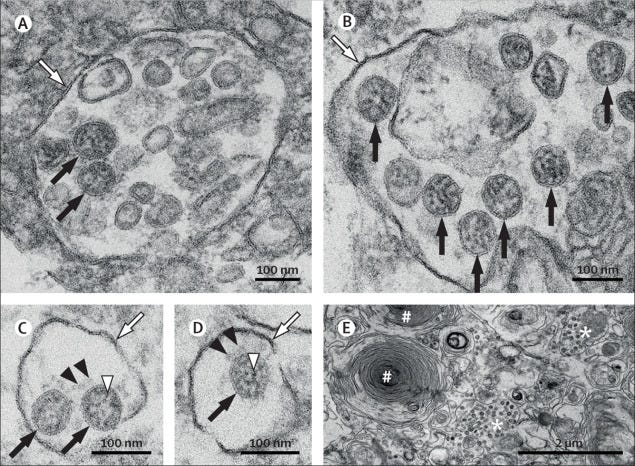

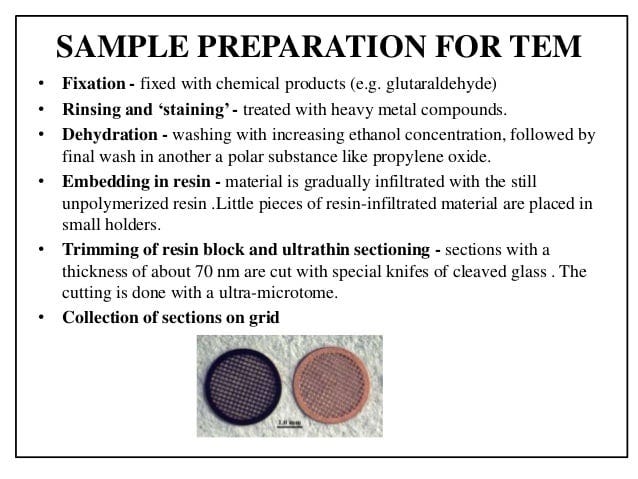

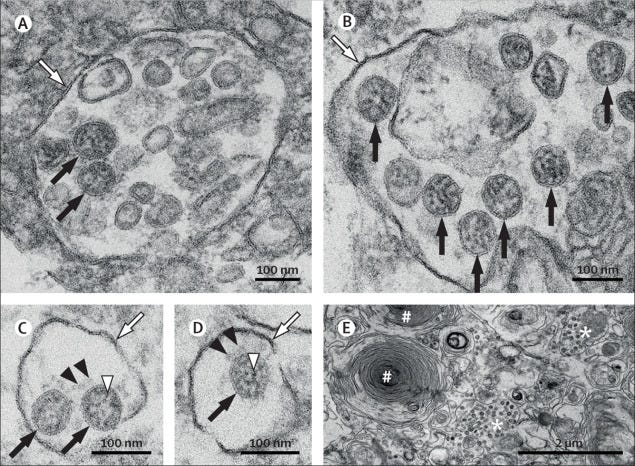

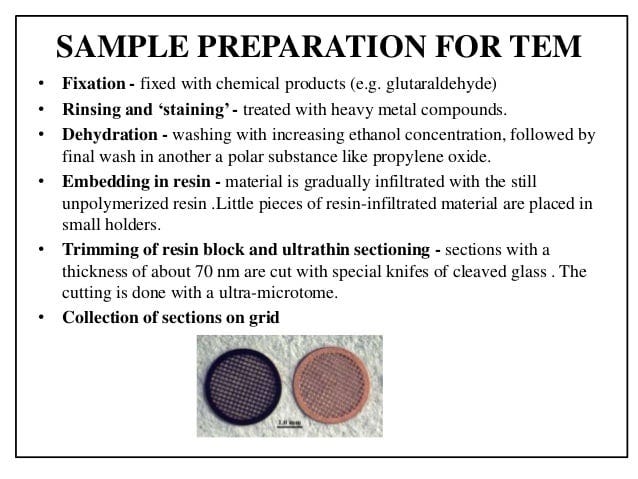

This unscientific pattern continues today. Virologists take the supernatant—the top layer of liquid from an unpurified, chemically manipulated cell culture—and subject it to a series of destructive, distorting steps to prepare it for EM imaging.

- Fixing (killing) with glutaraldehyde or paraformaldehyde

- Staining with heavy metals

- Dehydration in graded alcohols

- Embedding in epoxy resin

This elaborate process introduces numerous variables and distortions, raising a crucial question: how much of what is seen in the final image actually reflects reality?

Neurobiologist Harold Hillman argued that many of the structures seen in cells via electron microscopy are artifacts: products of the preparation process itself rather than real features of living tissue. He described just how far the sample must travel before becoming an image in a textbook:

“For example, most cytologists know, but readers of elementary textbooks do not, that when one looks at an illustration of an electron micrograph: an animal has been killed; it cools down; its tissue is excised; the tissue is fixed (killed); it is stained with a heavy metal salt; it is dehydrated with increasing concentrations of alcohol; it shrinks; the alcohol is extracted with a fat solvent, propylene oxide; the latter is replaced by an epoxy resin; it hardens in a few days; sections one tenth of a millimetre thick, or less, are cut; they are placed in the electron microscope, nearly all the air of which is pumped out; a beam of electrons at 10,000 volts to 3,000,000 volts is directed at it; some electrons strike a phosphorescent screen; the electron microscopists select the field and the magnification which show the features they wish to demonstrate; the image may be enhanced; photographs are taken; some are selected as evidence. One can immediately see how far the tissue has travelled from life to an illustration in a book.”

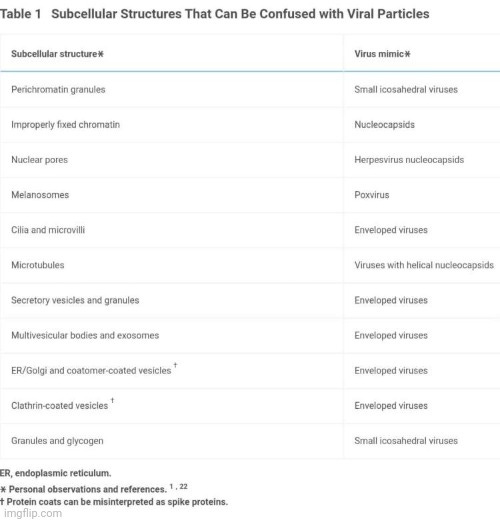

By the end of this process, the original biological material has been radically transformed—not just by the culturing conditions, but by the preparation process itself. The result is a soup of particles, none purified, none proven to originate from a sick host. From this dead debris, a technician selects a particle—indistinguishable from cellular vesicles, “exosomes,” or breakdown products—and labels it a “virus.” This identification rests not on evidence, but on interpretation and assumption.

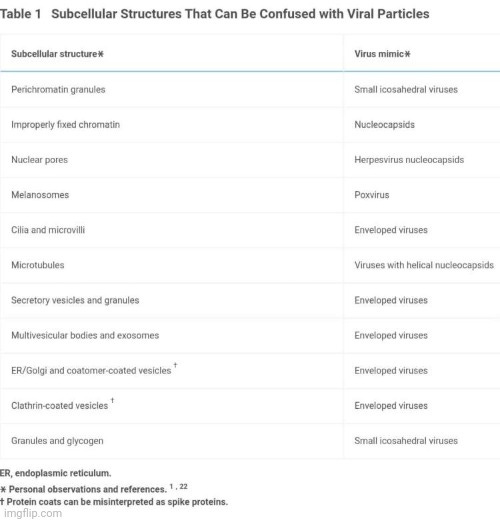

Compounding the problem, particles classified as normal cellular structures—such as multivesicular bodies, clathrin-coated vesicles, and rough endoplasmic reticulum—are regularly mistaken for “viruses,” and vice versa. These same “virus-like” particles have been observed in both sick and healthy samples, in cultured cells, and even in fetal bovine serum used as growth media. When such particles appear in the “wrong” context—where “viruses” aren’t expected—they are dismissed as debris or reclassified as “exosomes.” The categorization shifts to suit the narrative.

Worse still, the logic underlying this process is circular: the existence of the “virus” is assumed from the outset, and the appearance of a particle is then taken as confirmation of that assumption. This is a textbook case of begging the question. Without purification, without functional testing, and without direct causal evidence, these images are not scientific proof—they are visual reinforcements of an unproven idea.

Far from offering a true glimpse into the invisible world of “viruses,” they are distortions—products of expectation, not observation. In the end, EM images in virology do not demonstrate the existence of pathogenic “viruses.” They serve only to illustrate a belief system built on illusion and assumption.

Antibodies: Does One Fictional Entity Prove Another?

Another indirect trick virologists frequently rely on is the supposed specificity of “antibodies”—proteins claimed to react with antigens, or substances deemed foreign, such as toxins, proteins, peptides, or polysaccharides. The prevailing pseudoscientific hypothesis asserts that each “antibody” binds exclusively to a specific antigen, like a lock and key. We’re told these “antibodies” exist in our bodies, battling “pathogenic viruses” and restoring health. In the lab, “antibodies” are used to claim the presence of a specific “virus” and to assert “immunity,” whether from vaccination or natural “infection.”

But what most people don’t realize is that, like “viruses,” “antibodies” have never been purified or isolated directly from human fluids. They remain entirely hypothetical, never directly observed. As with “viruses,” the concept of “antibodies” emerged in the late 19th century from researchers who inferred their existence from artificial laboratory experiments, not biological observation.

In 1890, Koch’s pupil Emil von Behring hypothesized the existence of “antibodies” based on lab-induced effects—not any verifiable, naturally occurring substance. His experiments involved injecting animals with chemicals like iodine trichloride and zinc chloride to neutralize “bacterial toxins.” He interpreted the resulting effects as “immunity,” even though he admitted this “immunity” was temporary and could vanish under certain conditions. Behring’s lab partner, Shibasaburo Kitasato, thought they had merely demonstrated habituation to toxins—a well-known phenomenon in which organisms adapt to increasing doses of a harmful substance over time.

Despite differing interpretations, German physician Paul Ehrlich built on Behring’s ideas, transforming what Behring saw as a chemical process into the claim of discrete molecular entities. In 1891, he coined the term “antibody” and developed elaborate models of how these unproven entities supposedly worked. His interpretations, based on artificial manipulations and speculation, lacked direct evidence—but they gained traction and laid the foundation for modern immunology.

Ehrlich’s reasoning begged the question—he presupposed that “antibodies” were real, chemically distinct substances before proving their existence. This assumption was heavily contested by some of Ehrlich’s contemporaries who stated that his depictions were fictional and fundamentally misleading, warning they should be discarded as they did not reflect biological reality. But these criticisms were ignored. In the decades that followed, at least six conflicting theories emerged to explain the structure and function of these hypothetical agents:

These competing models reflect deep uncertainty over what “antibodies” actually are—if they exist at all. Yet virologists continue to use “antibody” tests to assert the presence of specific “viruses,” relying on indirect chemical reactions to claim proof of both. Common examples include:

- Neutralization assays

- Hemagglutination inhibition tests

- Complement fixation tests

- Immunoassays

- Immunoblotting

- Lateral flow tests

What virologists often omit is the fact that what are claimed to be “antibodies” are nonspecific—they are frequently said to bind to proteins that aren’t their intended target. For example, according to their own studies, “antibodies” for “SARS-COV-2” have been shown to bind to a wide range of substances, including, but most likely not limited to:

- “Viruses:” Other “coronaviruses,” Herpes, Influenza, Human “papillomavirus” (HPV), Respiratory syncytial “virus” (RSV), “Rhinoviruses,” “Adenoviruses,” “Poliovirus,” Mumps, Measles, Ebola, “HIV,” Epstein-Barr “virus,” “Cytomegalovirus” (CMV)

- Bacteria: Pneumococcal bacteria, E. faecalis, E. coli, Borrelia burgdorferi (the bacterium associated with Lyme disease)

- Parasites: Plasmodium species (Malaria), Schistosomes

- Vaccines: DTaP, BCG, MMR

- Foods: Milk, Peas, Soybeans, Lentils, Wheat, Roasted almonds, Cashews, Peanuts, Broccoli, Pork, Rice, Pineapple

The lack of specificity of “antibodies” and the unreliability of “antibody” tests is a well-documented issue in the mainstream literature. The “antibodies” sold to laboratories vary in quality and differ significantly between batches, leading to erroneous and false results. The reliability of “antibody-based” research is further compromised by the inability to reproduce or replicate results consistently, which has significantly contributed to the ongoing reproducibility crisis in scientific research.

At the core of this issue is the fact that both “antibodies” and “viruses” remain unproven constructs. Virologists use belief in one to prop up belief in the other, perpetuating a cycle of unverified assumptions. The circular reasoning is critical: the presence of “antibodies” is used to suggest the presence of a “virus,” while the assumption that “antibodies” only appear in response to “viral infection” remains unproven. In turn, the presence of a “virus” is taken as confirmation of the “antibodies,” reinforcing belief in both without any direct, objective evidence. This self-reinforcing loop gives the illusion of evidence—without ever verifying the existence of either entity.

“Viral” Genomes: Assembling Arbitrary A, C, T, Gs of Unknown Origin in Silico

“Today virology is in danger of losing its soul, since viruses now show a strong tendency to become sequences…Moreover, and it is the direct result of an abundance of discoveries, the very concept of virus wavers on its foundations.”

-Andre Lwoff

Source: Alfred Grafe, A History of Experimental Virology, transl. Elvira Rechendorf (Berlin, New York and London: Springer-Verlag, 1991).

With the rise of molecular virology, genomics has played an ever-increasing role in the “viral” delusion. The advent of PCR in the 1980s led to the DNA Xerox machine being used as a makeshift test to detect “viruses” based on fragments of their genomes. However, as was clearly demonstrated during the “Covid-19 pandemic,” PCR is highly inaccurate and unsuitable for this purpose. What’s also evident is that genomes themselves are entirely unreliable, as virologists are unable to sequence the exact same genome every time. At the time of this writing, there are nearly 17 million variants of the same “SARS-COV-2 virus” running around.

Why is this the case? Setting aside the equally flawed foundations of the DNA paradigm for a moment, let’s address this hypothetically. If someone claims to have a “viral” genome, they must first possess the “virus” itself to extract its genetic material. For example, if I claim to have sequenced the genome of a dog, I would need to start with an actual dog—and ideally, have living specimens to confirm that the genome is both accurate and biologically meaningful. Without direct access to the entity in question, the genomic claim becomes baseless, and the entire premise collapses.

As discussed before, virologists are unable to purify and isolate the particles they claim are “viruses,” so the resulting genome comes from unpurified mixtures of RNA and DNA, including genetic material from humans, animals, bacteria, and other microorganisms. There is absolutely no way to tell where the genetic material is coming from nor whether it belongs to a single source, especially when sequenced from unpurified cell culture supernatant.

Even the WHO warned that passaging genetic material through cell cultures can introduce artificial mutations not present in the original sample, which can compromise subsequent analyses. They specifically advised against using cell culture “solely for the purpose of amplifying virus genetic material for SARS-CoV-2 sequencing.” Nonetheless, virologists continue to assemble hypothetical genomes—digital sequences of A, C, T, and G—claimed to represent a “virus” that has never been directly observed.

The so-called reference genome utilized to verify a newly assembled “viral” genome is not a sequence from a purified “viral” particle, but a consensus model—a stitched-together average built from multiple inconsistent and unverified samples. This process assumes the very thing it sets out to prove: that a coherent “virus” genome exists. The result is circular logic disguised as scientific progress.

To justify these digital constructs, virologists compare new models to old ones already stored in databases—sequences built on the same flawed assumptions. For example, the first “viral genome,” attributed to bacteriophage Φ-X174, was not derived from purified particles. Its sequence may have been nothing more than a patchwork of unrelated fragments, but it became a precedent that allowed decades of downstream error. The practice of validating present genomes by comparing them to past ones creates a closed loop, where consistency is mistaken for accuracy, allowing for the reinforcement of the original errors.

The proliferation of millions of so-called “variants” further undermines the claim of a stable, identifiable “virus.” It also raises a critical question: how can one entity possess so many divergent genomic representations and still be considered the same thing? The theory becomes unfalsifiable when any deviation from the expected genome is labeled a “mutation” or “variant,” allowing any result to be reinterpreted to fit the narrative, no matter how inconsistent, rather than to challenge it.

I previously analyzed the CDC’s protocol for constructing “viral” genomes, highlighting numerous ways contamination and other factors can affect the final product. Technological limitations further complicate the process. The fact that there are numerous processing steps the samples must go through during the creation of a genome—each introducing alterations, artifacts, distortions, and errors—makes it easy to see that the final “viral genome” is nothing but a meaningless, indirect, and fraudulent representation of a non-existent entity.

Even virologists have acknowledged the problem. In 2001, Charles Calisher cautioned that detecting nucleic acid “is not equivalent to isolating a virus.” He warned against the “wholesale takeover by modern lab toys,” noting that “a string of DNA letters in a data bank” tells us little about how “viruses” work. Studying sequences alone, he said, is like “trying to say whether somebody has bad breath by looking at his fingerprints.”

Edward R. Dougherty, Scientific Director of the Center for Bioinformatics and Genomic Systems Engineering, echoed similar concerns. In a 2008 paper, he described an “epistemological crisis” in genomics. He warned that modern genomics often fails to meet the basic requirements of scientific method and epistemology. “The rules of the scientific game are not being followed,” he wrote. Accumulating large amounts of data may seem impressive, but data alone does not equal science. Dougherty stressed that contemporary genomic research often produces invalid knowledge, failing to qualify as true science.

This reliance on computer-generated genomes—standing in for an entity never shown to exist in a purified and isolated state—has led to the use of fragments from this fraudulent RNA assembly of unknown provenance as a means of detection via PCR. The results have been disastrous. Vast numbers of people have been tested and diagnosed with a computer-constructed “virus,” despite being entirely free of disease. Entire populations have been locked down, quarantined, and treated for something that exists only in silico, not in nature. There is no scientific evidence linking the A, C, T, and G sequences in digital databases to the unpurified particles selected in electron microscope images. In the end, it is pseudoscientific fraud—generated by computers and accepted by consensus.

Proof of Pathogenicity?

The most horrific aspect of the indirect methods used to claim the existence of “pathogenic viruses” is the grotesque and senseless torture of animals in the pursuit of so-called evidence. In one infamous experiment, holes were drilled into the skulls of monkeys so that emulsified spinal cord tissue from a paralyzed 9-year-old boy could be injected directly into their brains. This was heralded as “proof” that polio causes paralysis. In another case, rabbits were scraped with sandpaper, then had toxic emulsions of ground-up wart tissue rubbed into their open wounds to “demonstrate” the existence of the so-called “papillomavirus.” These same rabbits were also injected with the concoction into their veins, stomachs, subcutaneous fat, testicles, and brains.

In yet another disturbing example, rabbits had their eyes deliberately scarified with scalpels so that a similar toxic mixture—allegedly containing the varicella-zoster “virus” (chickenpox/shingles)—could be introduced. Once again, they were subjected to injections into every possible site, including their testicles. These experiments are not rare outliers—they are standard practice within virology literature. Many ended in failure, producing no disease and no meaningful conclusions—only dead and mutilated animals sacrificed for the sake of a pseudoscientific narrative.

Crucially, none of these experiments involved purified or isolated “viral” particles. The injection mixtures typically consisted of unfiltered, decomposing biological slurries—tissue from previously killed animals, ground up, mixed with chemicals, and applied through wholly unnatural routes. These studies prove only that poisoning an animal with foreign biological material can cause harm—not that a specific, “infectious” agent causes a specific disease.

Why do virologists resort to such horrific lengths? Because the more honest, direct, and scientific approach—demonstrating natural, person-to-person transmission—has failed, repeatedly and embarrassingly. During the so-called “Spanish flu” pandemic of 1918, U.S. Navy doctors conducted rigorous transmission experiments on healthy volunteers. They sprayed bacteria-laden fluids from flu patients into volunteers’ noses and eyes, injected blood from the sick into their veins, and even had them inhale the breath and coughs of actively ill individuals. Not a single one of the volunteers got sick.

“We entered the outbreak with a notion that we knew the cause of the disease and were quite sure we knew how it was transmitted from person to person. Perhaps, if we have learned anything, it is that we are not quite sure what we know about the disease.”

—Dr. Milton Rosenau, Journal of the American Medical Association, 1919

Similar studies at Angel Island in San Francisco in early 1919 yielded the same result: the “deadliest virus” in history could not be passed from person to person under controlled experimental conditions. Decades of human challenge trials continued to produce the same confounding outcome: no consistent, repeatable transmission of illness from sick humans to healthy ones.

Examples include:

- 1919, McCoy et al.: 8 experiments on 50 men—all failed. 0/50 became sick.

- 1919, Wahl et al.: 3 studies attempting to infect 6 healthy men using mucus and lung tissue—0/6 became ill.

https://www.jstor.org/stable/30082102

- 1920, Schmidt et al.: Among 196 people exposed to secretions, only 10.7% developed colds, while 18.6% of the saline-injected controls did—a higher illness rate among the placebo group.

THE ETIOLOGY OF ACUTE UPPER RESPIRATORY INFECTION (COMMON COLD) - PubMed

- 1921, Williams et al.: Tried to infect 45 men with influenza and colds. 0/45 became ill.

THE ETIOLOGY OF ACUTE UPPER RESPIRATORY INFECTION (COMMON COLD) - PubMed

- 1924, Robertson & Groves: 100 healthy individuals exposed to bodily secretions from flu patients—0/100 became ill.

https://academic.oup.com/jid/article-abstract/34/4/400/832936

- 1930, Dochez et al.: One subject only developed symptoms after mistakenly being told he received the actual filtrate—a textbook case of the nocebo effect.

STUDIES IN THE COMMON COLD : IV. EXPERIMENTAL TRANSMISSION OF THE COMMON COLD TO ANTHROPOID APES AND HUMAN BEINGS BY MEANS OF A FILTRABLE AGENT - PubMed

- 1937, Burnet & Lush: Exposed 200 healthy individuals—0/200 became ill.

Influenza Virus on the Developing Egg: VII. The Antibodies of Experimental and Human Sera - PMC

- 1940, Burnet & Foley: Another failed attempt on 15 students to induce influenza.

https://onlinelibrary.wiley.com/doi/abs/10.5694/j.1326-5377.1940.tb79929

- 1946–1989, The Common Cold Unit (UK): Over 20,000 volunteers exposed to cold “viruses.” The inability to reliably induce illness eventually led to the unit’s shutdown. Virologist Christopher Andrewes remarked, “You shouldn’t be interested in why volunteers given viruses get colds, but why so many don’t!”

The Infectious Myth Busted Part 7: The Common Cold Vacation – ViroLIEgy

Faced with these repeated, damning failures, virology abandoned attempts to demonstrate “natural infection” and instead turned to highly contrived and artificial methods. Human challenge trials became rare and tightly manipulated. In their place, researchers sought refuge in animal torture—inflicting elaborate and grotesque procedures in the name of “proof.” More recently, so-called “SARS-COV-2” human trials have returned—but these involve inoculating volunteers with lab-grown cell culture fluids, not purified “viral” particles taken directly from the sick host, delivered directly into their nasal passages using nose clips to “ensure infection.” This is no more natural, ethical, or scientifically valid than the animal trials it attempts to replace. Regardless, these attempts have all failed to produce the intended results.

There is no solid proof that purified, isolated “viruses” cause disease. There is no demonstration of natural contagiousness. What we do have is a legacy of ritualized poisoning—of animals and now humans—in a desperate attempt to prop up a crumbling “theory.” Virology has replaced science with spectacle, swapping logic and evidence for cruelty, fear, and digital fabrication.

Piecing The Puzzle Together

Hopefully, it’s now clear that virology does not follow the scientific method. It lacks a valid independent variable—specifically, purified and isolated particles directly obtained from the fluids of a sick human—which is essential for establishing cause and effect through experimentation. Without this, there is no direct evidence that any so-called “virus” has ever been inside a human. And without that, there can be no fulfillment of Koch’s Postulates, the logic-based criteria required to prove that a specific microbe causes a specific disease. The logical chain of causation has yet to be demonstrated.

To establish microbial causation scientifically, the following must occur:

- The microbe actually exists directly in the fluids of sick hosts but not in the fluids of healthy hosts.

- The specific microbe is identified, purified, and isolated as a valid independent variable prior to experimentation (known as time order: the cause must exist before the effect).

- The microbe is introduced into a healthy host in the manner proposed by the hypothesis (via aerosolization, ingestion, etc.) as the mode of “infection.”

- The specific disease associated with the microbe is reproduced following this introduction.

- The disease is transmissible from a sick host to a healthy host in the hypothesized manner (e.g., through close contact, coughing, sneezing, etc.).

- After transmission, the same microbe can be purified and isolated from the fluids of the newly sickened host and confirmed.

- This process must be repeated with a large sample size with proper control experiments, and the results must be independently reproduced by other researchers.

Instead of meeting these scientifically and logically necessary criteria, virology relies on flawed, indirect methods that do not establish causation. These include:

- Cytopathic effects observed in cell cultures, which are not specific and often occur without any presumed “virus” present.

- PCR amplification of genetic fragments from unpurified, mixed samples, which cannot prove the presence of whole, replicating “viral” entities.

- Electron microscopy images of presumed “viral” particles, typically taken from impure and heavily manipulated material.

- Antibody detection (such as ELISA or neutralization tests), which assumes prior exposure to a “virus” without directly proving its existence, let alone causation. These tests are indirect by nature, prone to cross-reactivity, and often interpret correlation as proof of “infection.”

None of these techniques logically or scientifically demonstrate causation. At best, they produce associations based on assumptions—assumptions that are rarely, if ever, tested using proper control experiments. In this way, the indirect evidence used in virology supports the story of the “virus” much like a parent supports the story of Santa Claus: footprints by the fireplace, missing cookies, and gifts under the tree all serve to reinforce the narrative, not to prove it. These signs are consistent with the tale, but they are not scientific proof of the character’s existence. Likewise, cytopathic effects, PCR fragments of presumed “viral” genomes, electron microscopy images, and “antibody” tests may align with the pseudoscientific “virus” hypothesis—but they do not confirm it. They support the narrative, not demonstrate the cause.

Real science demands controlled, falsifiable experiments to test hypotheses based upon observed natural phenomena. In virology, the absence of purified input material, valid controls, and directly testable hypotheses makes falsification impossible. This leaves the field vulnerable to circular reasoning, where the presence of disease is taken as proof of the “virus,” and the “virus” is assumed to be the cause of the disease. As a result, the “virus” remains scientifically unproven—an idea, not a demonstrated fact—and virology becomes pseudoscience masquerading as legitimate science.

Navigating the Site

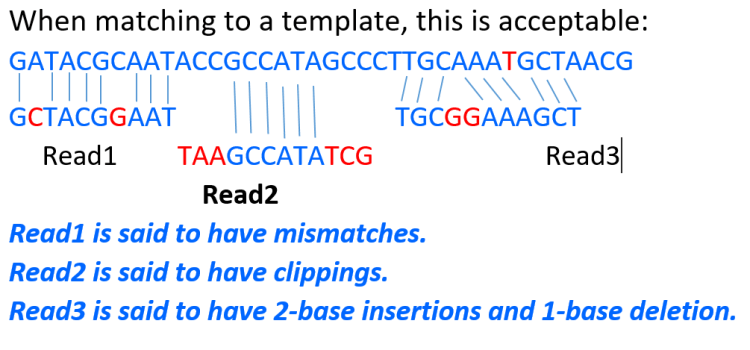

To help you better understand and explore the papers referenced on this site, I want to briefly show you how to use the links properly. Below any paper I cite in an article, you’ll usually find a link to the full text. If the paper is behind a paywall, a DOI number will typically be listed at the end—this can be copied and searched using tools like Sci-Hub.se to access the full article.

In my articles it will look like this:

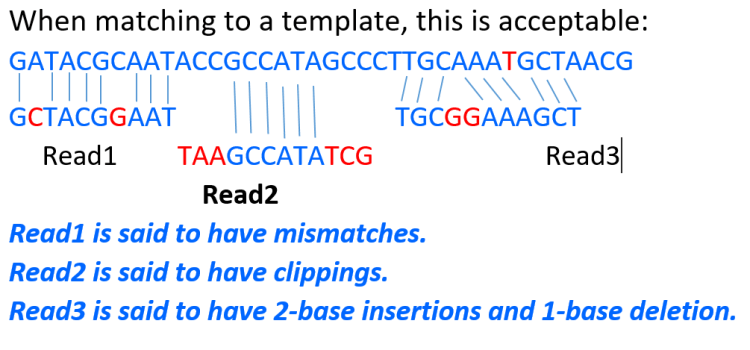

In a study, it will normally appear like this: